
Getting data from one place to another isn’t always as easy as it sounds, especially when that data keeps changing every second. That’s why tools like Kafka and MongoDB are often used together. Kafka helps move real-time data from one point to another, and MongoDB stores it in a way that’s flexible and easy to access.

When you connect Kafka to MongoDB, you create a smooth data pipeline. This setup is perfect for apps that need to collect and use data right as it happens—like tracking users, monitoring devices, or recording transactions. In this guide, we’ll break down how this connection works, why it’s useful, and how you can set it up without needing to be a coding expert.

Apache Kafka is designed to be fast and durable. Rather than sending data directly from point A to point B, Kafka gathers data into a construct known as a "topic." Consider a topic as a mailbox in which messages are deposited. These messages could originate from anything—a website, an app on your phone, or a factory sensor.

Each piece of data (called an event or message) is published by a producer. The producer sends it to Kafka, which keeps it for the topic's configured retention period, whether or not anyone has read it yet. From there, a consumer picks it up and does something with it, such as storing it in a database.
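The producer, topic, and consumer roles can be sketched with a toy in-memory model. This is not the Kafka client API; the `Topic` class and its `publish` and `read_from` methods are hypothetical names, used only to show how an append-only log with offsets behaves.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Toy stand-in for a Kafka topic: an append-only list of messages."""
    name: str
    log: list = field(default_factory=list)

    def publish(self, message: dict) -> int:
        """Producer side: append a message and return its offset."""
        self.log.append(message)
        return len(self.log) - 1

    def read_from(self, offset: int) -> list:
        """Consumer side: return every message at or after the given offset."""
        return self.log[offset:]


topic = Topic("user_signups")
topic.publish({"user": "ana"})
topic.publish({"user": "ben"})

# A consumer tracks its own position. Reading does not remove messages
# from the log, so another consumer could read the same data again.
consumer_offset = 0
batch = topic.read_from(consumer_offset)
consumer_offset += len(batch)

topic.publish({"user": "cy"})
new_messages = topic.read_from(consumer_offset)
print(new_messages)  # [{'user': 'cy'}]
```

The point of the sketch is that the log and the consumer's position are separate things, which is what lets a consumer stop and pick up again later.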

This configuration is a good fit when you need to move data quickly and can't afford to lose any of it. If a consumer crashes or the network slows down, the messages stay in the topic. When the consumer comes back, it resumes from the last position it recorded, provided the messages are still within the retention period. This is what makes Kafka well suited to real-time systems, particularly at high data volumes.

MongoDB is different from traditional relational databases. It stores information as flexible, JSON-like documents (BSON internally) instead of rows in fixed tables. This means you can add new fields or make changes without restructuring the whole system. That makes it a good match for Kafka’s real-time nature. When messages come in from Kafka, MongoDB can store them in a way that keeps their structure intact.

Let's say you're tracking deliveries for an app. Each message from Kafka might include things like package ID, delivery time, location, and customer name. MongoDB can save this as a document with the same fields, without forcing it into a strict table format. Later, you can run queries, make charts, or connect the data to a dashboard.
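As a rough illustration of the delivery example, the sketch below parses two JSON messages and keeps them as documents. The field names and values are made up for this example, and a plain Python list stands in for a MongoDB collection, so no database is needed to run it.

```python
import json

# Hypothetical delivery events, as they might arrive from a Kafka topic.
raw_messages = [
    '{"package_id": "PKG-1", "delivered_at": "2024-05-01T10:15:00Z", '
    '"location": "Lisbon", "customer": "Ana"}',
    '{"package_id": "PKG-2", "delivered_at": "2024-05-01T11:40:00Z", '
    '"location": "Porto", "customer": "Ben", "signature_required": true}',
]

# A plain list stands in for a MongoDB collection in this sketch.
collection = [json.loads(m) for m in raw_messages]

# The second document carries an extra field; no schema change was needed.
needs_signature = [
    doc["package_id"] for doc in collection if doc.get("signature_required")
]
print(needs_signature)  # ['PKG-2']
```

With a real MongoDB collection, the same idea applies: documents in one collection do not have to share an identical set of fields.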

Together, Kafka and MongoDB let you collect, move, and store real-time data without delays or complexity. That’s why this setup is popular in logistics, health monitoring, financial services, and many other areas.

Creating this pipeline may sound technical, but it follows a logical flow. You don’t need to build everything from scratch, either. There are tools available—like Kafka Connect—that help link Kafka to other systems like MongoDB.

Kafka Connect is a framework that ships with Apache Kafka and acts like a bridge to other systems. You install the MongoDB connector into Kafka Connect and then give it a configuration. That configuration tells the connector which topic to read from and where in MongoDB to write the data.

Here’s what the setup usually looks like in practice. Let’s say your Kafka topic is called “user_signups.” The sink connector reads every new signup event and inserts it into MongoDB under a collection like “new_users.” The connector's settings also let you control how many records are written per batch, and Kafka Connect's single message transforms can rename or reshape fields and filter messages based on conditions.
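A minimal sketch of such a configuration follows, written as a Python dictionary and wrapped in the request that would register it through Kafka Connect's REST interface. The topic and collection names come from the example above; the connector name, database name, connection URI, and Connect URL are placeholders. The property names are the ones the MongoDB Kafka sink connector is generally documented to use, but check them against the version you install.

```python
import json
import urllib.request

# Placeholder values: adjust the URI, database, and Connect URL for your setup.
connector = {
    "name": "mongo-sink-user-signups",  # hypothetical connector name
    "config": {
        "connector.class": "com.mongodb.kafka.connect.MongoSinkConnector",
        "topics": "user_signups",
        "connection.uri": "mongodb://localhost:27017",
        "database": "app",  # hypothetical database name
        "collection": "new_users",
        # Treat message values as schemaless JSON.
        "key.converter": "org.apache.kafka.connect.storage.StringConverter",
        "value.converter": "org.apache.kafka.connect.json.JsonConverter",
        "value.converter.schemas.enable": "false",
    },
}


def build_request(connect_url: str = "http://localhost:8083") -> urllib.request.Request:
    """Build (but do not send) the POST that registers the connector."""
    return urllib.request.Request(
        url=f"{connect_url}/connectors",
        data=json.dumps(connector).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_request()
print(request.get_method(), request.full_url)
# To register the connector against a running Connect worker:
# urllib.request.urlopen(request)
```

The request is only built here, not sent, so the sketch runs without a Kafka Connect worker. The same JSON body could equally be saved to a file and posted with any HTTP client.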

This whole process runs in the background, so once you’ve set it up, you don’t need to manually handle every update. The data pipeline keeps working as long as Kafka, the Kafka Connect worker, and MongoDB are running.

The biggest benefit of this pipeline is automation. You don’t need to write complex scripts every time data comes in. Once a message reaches Kafka, the connector carries it to MongoDB with no custom code in between.

Another advantage is real-time processing. In older systems, you had to wait for files to be transferred or reports to be generated. With Kafka and MongoDB, your app can react as soon as the data is available. If a user signs up, you can log that in MongoDB and trigger a welcome email instantly.

Scalability is another strong point. As your data grows, Kafka can handle more messages by adding brokers and partitions, and MongoDB can spread data across multiple servers through sharding. Whether you're working with a few dozen messages a day or millions an hour, the same design applies, though the cluster sizes will differ.

It’s also flexible. MongoDB doesn’t need rigid tables, so as your message structure changes—like adding new fields or updating formats—you don’t have to worry about breaking things.

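You get error handling, too. If MongoDB is temporarily down, the messages stay in the Kafka topic, and the connector can pick up from its last committed position once the database is back. This avoids data loss, which is critical for financial or healthcare systems. Because a message may be delivered more than once after a failure, it helps if writes are safe to repeat.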
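The recovery behaviour can be sketched with a toy model. Nothing here is Kafka or MongoDB code: a list plays the topic log, a dictionary keyed by `_id` plays the collection, and `run_sink` is a hypothetical function that simulates an outage partway through.

```python
# Toy model: a list is the topic log, a dict keyed by _id is the collection.
log = [
    {"_id": "tx-1", "amount": 40},
    {"_id": "tx-2", "amount": 15},
    {"_id": "tx-3", "amount": 99},
]
collection = {}
committed_offset = 0  # position of the next message that still needs storing


def run_sink(fail_at=None):
    """Write messages from the committed offset; optionally simulate an outage."""
    global committed_offset
    for offset in range(committed_offset, len(log)):
        if offset == fail_at:
            raise ConnectionError("database unavailable")
        doc = log[offset]
        collection[doc["_id"]] = doc  # upsert by _id: safe to repeat
        committed_offset = offset + 1  # commit only after a successful write


try:
    run_sink(fail_at=1)  # the database "goes down" before tx-2 is written
except ConnectionError:
    pass

run_sink()  # after recovery, resume from the committed offset
print(sorted(collection))  # ['tx-1', 'tx-2', 'tx-3']
```

Committing the offset only after a successful write means a failure can cause a message to be processed again, but not skipped. Writing by `_id` keeps a repeated message from creating a duplicate.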

Putting Kafka and MongoDB together isn’t just a tech trend. It’s a smart way to move real-time data into a format you can actually use. Whether you’re running a live dashboard, collecting user actions, or syncing services, this data pipeline helps you keep everything flowing smoothly. Kafka handles the message traffic, and MongoDB stores the results in a structure you can search, query, and build on. With tools like Kafka Connect, setting this up doesn’t require deep coding—just a clear idea of what data you want to track and where you want it to end up. That’s what makes Kafka to MongoDB a simple setup for today’s real-time apps.

Advertisement

Domino Data Lab introduces tools and practices to support safe, ethical, and efficient generative AI development.

Learn how __init__ in Python works to initialize objects during class creation. This guide explains how the Python class constructor sets instance variables, handles defaults, and simplifies object setup

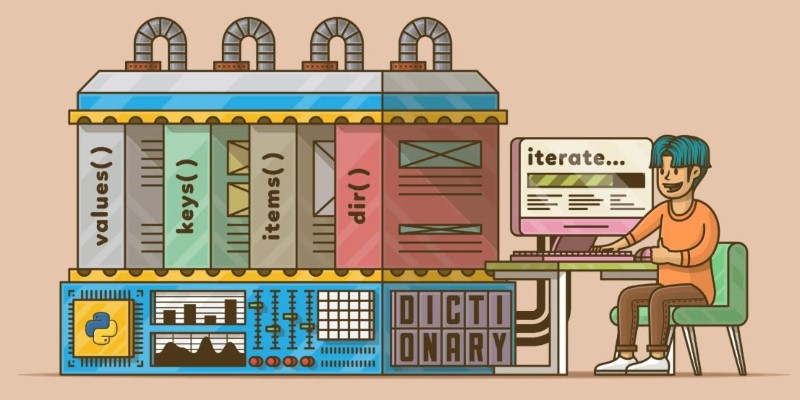

Learn how to loop through dictionaries in Python with clean examples. Covers keys, values, items, sorted order, nesting, and more for efficient iteration

Discover Oracle’s GenAI: built-in LLMs, data privacy guarantees, seamless Fusion Cloud integration, and more.

Learn how the Python list extend() method works with practical, easy-to-follow examples. Understand how it differs from append and when to use each

Need to save your pandas DataFrame as a CSV file but not sure which method fits best? Here are all the practical ways to do it—simple, flexible, and code-ready

Struggling with Copilot's cost or limits? Explore smarter alternative AI tools with your desired features and workflow.

Discover top industries for AI contact centers—healthcare, banking fraud detection, retail, and a few others.

Explore how Rabbit R1 enhances enterprise productivity with AI-powered features that streamline and optimize workflows.

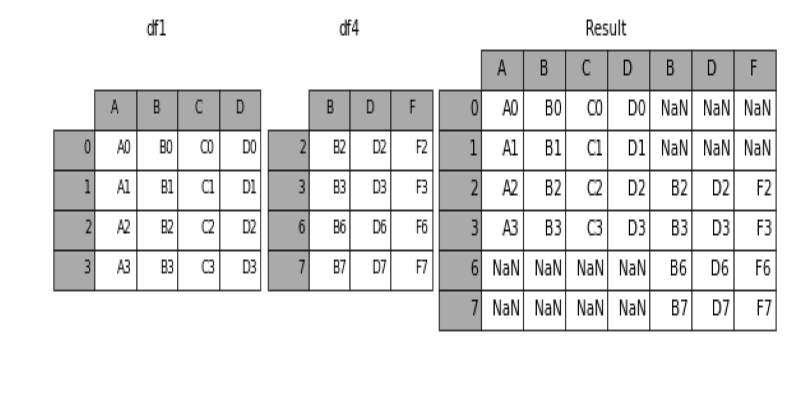

Learn how to concatenate two or more DataFrames in pandas using concat(), append(), and keys. Covers row-wise, column-wise joins, index handling, and best practices

Learn 10 clean and effective ways to iterate over a list in Python. From simple loops to advanced tools like zip, map, and deque, this guide shows you all the options

Explore how developers utilize the OpenAI GPT Store to build, share, and showcase their powerful custom GPT-based apps.