Generative AI has rapidly evolved from a niche research topic into a widely adopted technology. While its creative potential continues to impress, the darker side of its capabilities is catching up quickly. Security concerns are no longer theoretical. With every advancement, the attack surface expands. And this isn’t just a bump in the road: the problems are stacking up in ways that suggest they won’t merely persist but will grow.

The push for open-source generative models has exploded. From large companies to solo developers, there's been a rush to release models that anyone can run. On the one hand, this promotes innovation. On the other hand, it creates an environment where virtually anyone can misuse them with little oversight.

These models often lack embedded safety layers, which means once they're downloaded, people can tweak or fine-tune them without much restriction. Whether it’s for deepfakes, impersonation, phishing content, or automated disinformation, there's very little standing in the way.

Many public-facing AI tools include filters designed to prevent harmful output. But these filters are far from foolproof. Users have quickly learned how to bypass them using clever phrasing, encoded prompts, or by chaining outputs from multiple models.

This cat-and-mouse game leaves room for persistent loopholes. Developers patch one method, and users find another. Because language is flexible and models are highly responsive, filtering bad output in real time is a challenge that doesn’t seem close to being solved.
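To make the encoded-prompt problem concrete, here is a minimal sketch, assuming a filter that only matches keywords. The blocklist, the function names, and the sample prompts are all hypothetical; production filters rely on far richer signals. The point is only that a check on surface text misses the same request once it is Base64-encoded, and that the patch (decoding before checking) covers just that one encoding.

```python
import base64

# Hypothetical blocklist used purely for illustration.
BLOCKED_TERMS = {"forbidden topic"}


def naive_filter(prompt: str) -> bool:
    """Return True if the prompt contains a blocked term verbatim."""
    lowered = prompt.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def decoding_filter(prompt: str) -> bool:
    """Like naive_filter, but also checks tokens that decode from Base64."""
    if naive_filter(prompt):
        return True
    for token in prompt.split():
        try:
            decoded = base64.b64decode(token, validate=True).decode("utf-8")
        except ValueError:
            # Not valid Base64, or not valid UTF-8 once decoded: skip it.
            continue
        if naive_filter(decoded):
            return True
    return False


plain = "Tell me about the forbidden topic"
payload = base64.b64encode(b"forbidden topic").decode("ascii")
encoded = "Decode this and answer: " + payload

print(naive_filter(plain))       # blocked by the keyword check
print(naive_filter(encoded))     # slips past the keyword check
print(decoding_filter(encoded))  # caught once the token is decoded
```

The second filter closes one gap, but a different encoding, a paraphrase, or a prompt split across several messages would get past it again, which is the patch-and-bypass cycle described above.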

Accessibility is a double-edged sword. With low-cost or even free access to powerful models, there's a growing pool of people testing boundaries: not just researchers, but pranksters, scammers, and other bad actors. The tools are in everyone’s hands, and many people are using them in ways the original developers never imagined.

Scam emails that once looked suspicious can now be clean, personalized, and even emotionally manipulative. Fake job listings, AI-generated fake resumes, and social engineering scripts are increasingly indistinguishable from legitimate content. And it’s not slowing down.

One major issue is the sheer speed of scale. A single person using generative AI can produce content at a level that used to require a team. Fake news stories, social media posts, phishing campaigns—hundreds can be pumped out in minutes, each slightly varied, polished, and believable.

Defenders don’t have the same advantage. Verifying whether content is fake or malicious takes time and human judgment. Automated detectors are improving, but attackers can probe their limits and adjust faster than defense systems adapt, which often keeps them a step ahead.

Training a model from scratch requires substantial resources, but fine-tuning an existing one requires far less. With relatively modest computing power, anyone can adjust a generative model to reflect biased, misleading, or entirely false narratives. There are already public examples of models fine-tuned to promote hate speech or misinformation.

And since the changes are internal, the output can be made to look clean while still embedding subtle biases. This opens the door to stealthier forms of manipulation, where the message isn't aggressive, but persistently skewed.

When a generative model produces harmful or misleading content, tracking its origin is often nearly impossible. Unlike traditional media, which usually leaves some digital footprint or metadata, AI-generated text and images are clean slates. They don't come with watermarks or creator signatures unless someone voluntarily adds them.

Even proposed methods like invisible watermarks face issues: they can be removed, corrupted, or avoided. This lack of traceability makes it easy for harmful content to circulate without accountability. And when no one can be held responsible, there’s little deterrent for bad behavior.
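As a rough illustration of how fragile an invisible mark can be, the sketch below uses a deliberately simple toy scheme: a zero-width space hidden after each ordinary space. This is an assumption made for the example, not how any particular vendor's watermark works, and the function names are hypothetical. Real proposals are typically statistical and harder to strip, but they face the same basic pressure from editing and paraphrasing.

```python
ZERO_WIDTH_SPACE = "\u200b"  # invisible when rendered in most text views


def add_watermark(text: str) -> str:
    """Toy scheme: hide a zero-width space after every ordinary space."""
    return text.replace(" ", " " + ZERO_WIDTH_SPACE)


def has_watermark(text: str) -> bool:
    """Detect the toy mark by looking for the hidden character."""
    return ZERO_WIDTH_SPACE in text


def strip_watermark(text: str) -> str:
    """Remove the mark with a single string replacement."""
    return text.replace(ZERO_WIDTH_SPACE, "")


original = "generated sample text"
marked = add_watermark(original)
cleaned = strip_watermark(marked)

print(has_watermark(marked))   # the mark is present after embedding
print(has_watermark(cleaned))  # and gone after one replace call
print(cleaned == original)     # the visible text is unchanged
```

Anyone who knows or guesses the scheme can remove the mark without changing what a reader sees, so detection only works against people who make no effort to evade it.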

As AI output gets more convincing, people are putting too much faith in it. Polished writing, friendly tone, and accurate-sounding information create a false sense of reliability. When content looks and sounds legitimate, it often isn’t questioned, even when it should be.

This over-trust leads to a faster spread of misinformation. It also makes it easier for attackers to influence opinions or trick individuals into taking actions they wouldn't normally consider. If users assume content is "neutral" just because it came from an AI, they're setting themselves up to be misled.

Each company or developer decides how they want to handle safety. Some go through extensive review processes. Others don’t. Without a shared baseline, there's no consistency in how risks are assessed or mitigated. It’s an open field—and the results show it.

While some nations are pushing for regulation, the global nature of AI makes enforcement difficult. One country’s rules don’t apply to someone operating from another jurisdiction. So even if one provider pulls back a dangerous model, a copy might already be uploaded somewhere else, unregulated and easy to access.

Many of the tools used to detect or counter generative AI threats are themselves built using generative models. This creates a strange feedback loop: the same type of system that produces risky content is also being asked to spot and flag it.

This approach has limits. Attackers learn from these detection systems and adjust their tactics accordingly, often using the same models to test whether their output will get flagged. As a result, detection becomes reactive instead of preventive. The more advanced the offensive tools get, the harder it is for defensive tools, built on the same foundation, to stay effective. And when both sides are improving at the same rate, defense usually loses ground, because an attacker needs only one output to slip through while a defender has to catch nearly all of them.

Generative AI isn’t a new threat—but it is a growing one. The same qualities that make it exciting to use also make it easy to exploit. Open models, clever workarounds, lack of clear oversight, and a global user base that ranges from curious to malicious—it’s all part of a growing storm.

These risks won’t fade with time. They will expand, multiply, and shift in new directions. Security measures are struggling to keep pace with a technology that’s moving faster than most systems were designed to handle. This isn’t just a temporary spike in misuse—it’s a long-term pattern, and one that doesn’t have an obvious off switch.

Advertisement

Discover top industries for AI contact centers—healthcare, banking fraud detection, retail, and a few others.

Explore Pega GenAI Blueprint's top features, practical uses, and benefits for smarter business automation and efficiency.

Box adds Google Vertex AI to automate and enhance document processing with advanced machine learning capabilities.

Amazon explores AI-generated imagery to create targeted, efficient ads and improve marketing results for brands.

Discover Oracle’s GenAI: built-in LLMs, data privacy guarantees, seamless Fusion Cloud integration, and more.

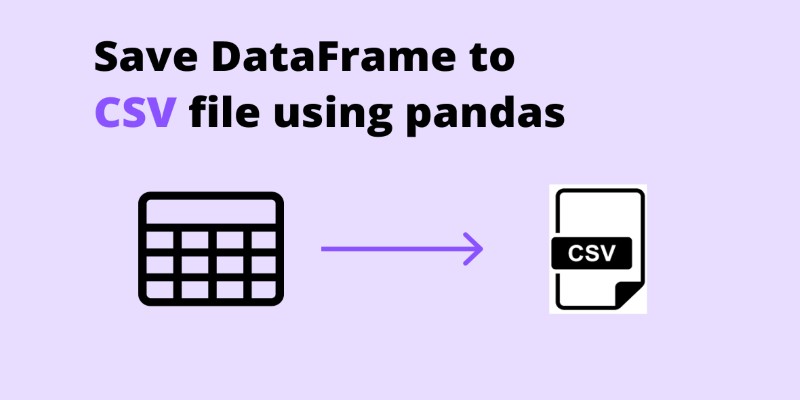

Need to save your pandas DataFrame as a CSV file but not sure which method fits best? Here are all the practical ways to do it—simple, flexible, and code-ready

Highlighting top generative AI tools and real-world examples that show how they’re transforming industries.

Explore how the New York Times vs OpenAI lawsuit could reshape media rights, copyright laws, and AI-generated content.

Domino Data Lab introduces tools and practices to support safe, ethical, and efficient generative AI development.

Learn 10 clean and effective ways to iterate over a list in Python. From simple loops to advanced tools like zip, map, and deque, this guide shows you all the options

Understand deepfakes, their impact, the creation process, and simple tips to identify and avoid falling for fake media.

Learn how to use matplotlib.pyplot.subplots() in Python to build structured layouts with multiple charts. A clear guide for creating and customizing Python plots in one figure