The New York Times vs. OpenAI lawsuit is more than just a legal clash—it's a defining moment in the evolving relationship between artificial intelligence and journalism. Filed in late 2023, the case alleges that OpenAI used millions of NYT articles without permission to train its AI models, including ChatGPT. As the media landscape rapidly changes, this lawsuit could set crucial legal and ethical standards for handling news content in the AI era.

This article explores the key players, what this lawsuit means for media organizations, and the top seven ways it could impact the entire industry.

The New York Times (NYT) is one of the most influential newspapers in the world, known for its investigative journalism and digital presence. OpenAI, on the other hand, is a leading artificial intelligence research company best known for developing ChatGPT.

In December 2023, NYT filed a lawsuit against OpenAI and Microsoft, claiming copyrighted NYT content was used without consent to train AI models. The newspaper argues that this undermines its value, compromises quality journalism, and gives AI platforms unfair advantages by repackaging news content.

This isn't just a conflict between two organizations. Let's explore the top seven ways the New York Times vs. OpenAI lawsuit impacts media.

One of the biggest outcomes of this lawsuit will be the legal boundaries it establishes. If courts rule that using copyrighted content to train AI models is a violation, it could restrict how tech companies gather data. This would require AI developers to license data, which could lead to the rise of paid content agreements, benefiting publishers.

For example:

If the NYT wins, developers of future models like GPT or Claude would need to obtain permission, and pay, to use large media archives during training. Similar to how the music industry once clashed with Napster over digital file-sharing, this case could reshape how digital data is legally accessed and used.

The lawsuit could push legislators to revisit and revise copyright laws. Currently, the legal framework surrounding AI-generated content and training data remains vague. This case highlights those loopholes, potentially accelerating new laws to safeguard digital media and promote transparency in AI development.

Consider how YouTube had to implement Content ID systems after facing copyright concerns. This lawsuit could trigger similar content-monitoring protocols for AI firms, requiring them to properly trace and attribute data sources. If copyright law is updated to explicitly cover data scraping for AI, it will likely lead to stricter enforcement and better content protection.

News outlets may gain leverage to negotiate licensing deals with AI firms. If the NYT wins or reaches a favorable settlement, it would encourage other media organizations to demand compensation for their content. This could foster a more balanced ecosystem where creators are paid for the value they provide to AI systems.

For example:

A notable example is The Associated Press, which entered into a licensing agreement with OpenAI in 2023, allowing the AI company to legally access its news archive. If this lawsuit results in a legal requirement for such deals, we may see a surge in similar contracts, especially between smaller media and tech companies.

Many people using AI tools like ChatGPT are unaware that the responses are often generated using data extracted from real news sources. This lawsuit highlights how AI content is made, increasing public demand for ethically sourced and transparent AI training data. It could also lead to clearer disclaimers or attribution in AI-generated content.

For example:

People might notice labels such as "Based on licensed data" or "AI-generated summary from a verified source," which would educate users and promote more informed interaction with AI-generated material. This awareness might influence how educational institutions or businesses approve the use of AI tools.

Public trust in both media and AI is at stake. If AI can copy and alter news content without accountability, it may dilute the source's credibility. This case could lead to a more cautious approach to AI-generated news and enhance mechanisms to distinguish between AI and authentic journalism.

A real-world concern is the spread of misinformation through AI-generated fake headlines or summaries, which appear convincing but are inaccurate. If this lawsuit encourages transparency, users will be better equipped to verify sources and rely on original journalism instead of AI approximations. Maintaining the integrity of journalism is vital in preserving democratic discourse.

Companies like OpenAI may be forced to rethink how they train models. A loss or settlement could require them to license datasets or create synthetic data, increasing development costs and shifting business models. Smaller AI firms might find it harder to compete, leading to more collaborations between tech and media sectors.

For example:

Future model training might rely more on synthetic content generated through simulation, or on public domain data, rather than copyrighted journalism. This shift could lead to slower AI advancements in natural language generation, but it may also encourage ethical data sourcing from the ground up. It's also likely to spark innovation in how companies collect and clean training data.

One positive outcome may be the emergence of formal partnerships between AI firms and media outlets. Instead of scraping content, companies may collaborate, share revenue, or co-develop tools that benefit both parties. This lawsuit could become the catalyst for responsible, mutually beneficial AI-media relations.

For example:

AI companies might help media outlets develop subscription tools, interactive archives, or personalized article summaries using their data, thus enhancing user engagement while maintaining ethical content use. Already, some startups are exploring such models, and if OpenAI shifts in this direction, it could lead to industry-wide adoption of cooperative frameworks.

The New York Times vs. OpenAI lawsuit is more than a headline—it's a major inflection point for the future of media and artificial intelligence. The outcomes of this case could dictate how content is used, how creators are compensated, and how trust is maintained in the digital age. As other media companies watch closely, this legal battle may lead to clearer policies, new partnerships, and greater accountability in AI development. It could also inspire developers to explore transparent AI designs that respect original creators. Journalists, developers, and everyday users all stand to benefit from a more ethical AI landscape.

Stay informed and proactive. Whether you're a journalist, developer, or reader, it's important to understand this case. It helps protect the integrity of content creation in an AI-driven world.

Advertisement

Learn how __init__ in Python works to initialize objects during class creation. This guide explains how the Python class constructor sets instance variables, handles defaults, and simplifies object setup

Learn how to use matplotlib.pyplot.subplots() in Python to build structured layouts with multiple charts. A clear guide for creating and customizing Python plots in one figure

Think generative AI risks are under control? Learn why security issues tied to AI models are growing fast—and why current defenses might not be enough

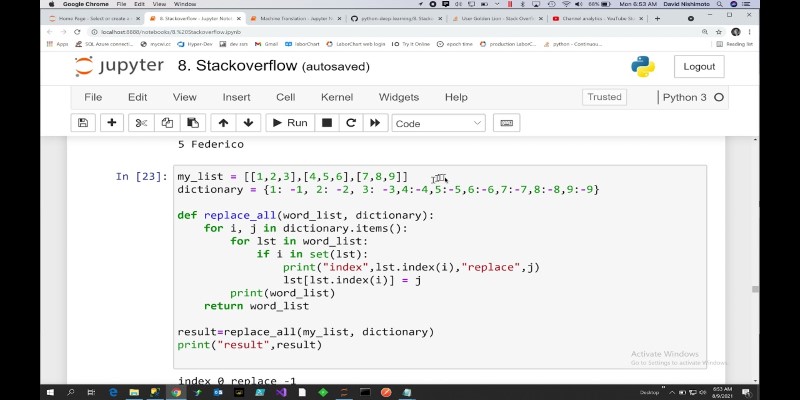

How to replace values in a list in Python with 10 easy methods. From simple index assignment to advanced list comprehension, explore the most effective ways to modify your Python lists

Discover Oracle’s GenAI: built-in LLMs, data privacy guarantees, seamless Fusion Cloud integration, and more.

Salesforce brings generative AI to the Financial Services Cloud, transforming banking with smarter automation and client insights

Need to share a ChatGPT chat? Whether it’s for work, fun, or team use, here are 7 simple ways to copy, link, or export your conversation clearly

Learn how the Python list extend() method works with practical, easy-to-follow examples. Understand how it differs from append and when to use each

Box adds Google Vertex AI to automate and enhance document processing with advanced machine learning capabilities.

Amazon explores AI-generated imagery to create targeted, efficient ads and improve marketing results for brands.

Explore how the New York Times vs OpenAI lawsuit could reshape media rights, copyright laws, and AI-generated content.

Understand deepfakes, their impact, the creation process, and simple tips to identify and avoid falling for fake media.