The race for artificial intelligence supremacy is accelerating, and it is fundamentally a hardware contest. Intel's newest product, the Gaudi 3 AI accelerator, challenges Nvidia's long-standing dominance in the industry, particularly against the widely used H100 and the forthcoming H200 GPUs. Demand for powerful, efficient processors is at an all-time high as large language models and deep learning applications keep scaling. This article examines how Intel's Gaudi 3 stacks up against Nvidia's GPUs across performance, power efficiency, scalability, and ecosystem integration.

Intel is betting heavily on Gaudi 3 to turn the tide in its favor. Intel claims lower power consumption and higher training throughput than Nvidia's H100, positioning the Gaudi 3 as a real competitor rather than just another option. Having designed this processor from the ground up for generative AI and large-scale deep learning, Intel hopes to offer developers and businesses a powerful but reasonably priced solution.

On performance, Intel reports strong figures for Gaudi 3. According to the company, the processor delivers 50% faster training throughput than Nvidia's H100 on well-known AI models like GPT-3 and Stable Diffusion. Intel attributes this speedup to high-bandwidth memory, upgraded processor cores, and a more optimised AI instruction set. With these architectural changes, Gaudi 3 may shorten model convergence times without requiring a proportional increase in power or compute resources.

Nvidia, for its part, maintains its advantage with the H100 and upcoming H200, which combine the Hopper architecture with high memory bandwidth from HBM3 and HBM3e. Gaudi 3 shines in training speed, but Nvidia's GPUs are viewed as more well-rounded for inference and more adaptable. Their design offers a strong mix of raw throughput and flexibility, optimised for both AI and conventional computing workloads.

Intel's main selling point for the Gaudi 3 is its power efficiency. Intel says Gaudi 3 offers higher performance per watt than the H100, which matters as sustainability and energy costs become increasingly urgent for data centres. Intel has used thermal design, power-aware routing, and AI-specific optimisations to minimise energy waste under heavy workloads. The result, by Intel's account, is a processor that is both faster and less power-hungry.

Nvidia's H100 is still competitive, but it draws more power. Tighter integration with cloud infrastructure and smart software management help Nvidia mitigate this, yet on raw power draw the comparison still favours Intel's Gaudi 3. For companies where power efficiency is crucial, particularly those running large-scale training facilities, Gaudi 3's advantage could translate into real cost savings.
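To make the cost argument concrete, here is a minimal Python sketch of how a difference in power draw turns into an annual electricity bill. The wattages, fleet size, and electricity price are hypothetical placeholders chosen for illustration, not published specifications for Gaudi 3 or the H100, and the function names are assumptions of this sketch.

```python
HOURS_PER_YEAR = 8760  # 24 hours * 365 days


def annual_energy_cost(power_watts, num_accelerators, price_per_kwh, utilization=1.0):
    """Estimate the yearly electricity cost of a fleet of accelerators.

    power_watts: average draw of one accelerator under load (hypothetical input)
    utilization: fraction of the year the fleet runs at that draw (0.0 to 1.0)
    """
    kwh_per_device = power_watts / 1000 * HOURS_PER_YEAR * utilization
    return kwh_per_device * num_accelerators * price_per_kwh


def performance_per_watt(throughput, power_watts):
    """Throughput (in any consistent unit, e.g. samples/s) divided by power draw."""
    return throughput / power_watts


if __name__ == "__main__":
    # Placeholder values only: two imaginary accelerators, not real chip specs.
    cost_a = annual_energy_cost(power_watts=600, num_accelerators=1000, price_per_kwh=0.10)
    cost_b = annual_energy_cost(power_watts=700, num_accelerators=1000, price_per_kwh=0.10)
    print(f"Accelerator A: ${cost_a:,.0f} per year")
    print(f"Accelerator B: ${cost_b:,.0f} per year")
    print(f"Difference:    ${cost_b - cost_a:,.0f} per year")
```

The estimate ignores cooling overhead and idle power, so a real comparison would likely need measured draw under the actual workload.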

Memory bandwidth is vital for AI performance, especially in large-scale model training. Gaudi 3's high-speed memory architecture allows more simultaneous data transfers and faster access to large datasets. The chip's 128GB of HBM2e (High Bandwidth Memory) helps it handle demanding workloads with few bottlenecks.

Nvidia counters with HBM3 in the H100 and HBM3e in the H200, newer memory generations than HBM2e, and the H200 in particular raises both bandwidth and capacity. Furthermore, the company's NVLink and NVSwitch technologies improve data transfer rates between GPUs in multi-GPU configurations. Intel has made great progress with Gaudi 3, but Nvidia's lead in memory and interconnect technology remains strong, especially for jobs that need distributed training across many nodes.

Intel designed the Gaudi 3 with scalability in mind. The chip supports integration into large clusters and is compatible with standard server designs, so companies can deploy it in their existing infrastructure with few changes. Intel also offers open software tools for customisation and for connecting with common AI libraries.

Nvidia's scaling story is considerably more mature. Having built massive GPU clusters over the years, Nvidia provides deep integration with its CUDA ecosystem, Kubernetes support, and enterprise-grade deployment tools. Although Gaudi 3 offers a flexible and reasonably priced deployment path, companies may find it simpler to scale with Nvidia without worrying about compatibility or performance trade-offs, as Nvidia's infrastructure tools remain more robust and more widely used.

Ecosystem and software support are another area in which Nvidia consistently leads. With CUDA, cuDNN, TensorRT, and a wide range of tools and frameworks tuned for Nvidia GPUs, developers have a streamlined path to building and deploying AI solutions. Most machine learning frameworks also come with native support for Nvidia GPUs, which lowers the adoption barrier.

Aware of this gap, Intel has deliberately worked to grow its software ecosystem. Through oneAPI and collaborations with the open-source AI community, popular frameworks like TensorFlow and PyTorch support Gaudi 3. On the Nvidia side, however, developer familiarity and community support are still stronger. For developers and companies that prioritise ease of integration and community-driven development, Nvidia remains the safest choice.

Intel's Gaudi 3 is part of a more ambitious effort to reclaim leadership in AI acceleration. By concentrating on specific pain points such as power efficiency and cost-effective scalability, Intel is targeting a segment Nvidia does not control. If Intel keeps up this pace, genuine alternatives to Nvidia's products could help create a more competitive AI hardware industry.

Meanwhile, Nvidia is not standing still. The company will likely keep its lead with the Blackwell architecture, which is under development, and with its ongoing investments in AI research, software, and partnerships. However, the launch of Gaudi 3 has brought significant competition, pushing Nvidia to innovate quickly and to reconsider hardware configurations and pricing to keep its market dominance.

Intel's Gaudi 3 signals a turning point in the competition for AI hardware. For companies focused specifically on power efficiency and scalable training performance, it offers a convincing alternative to Nvidia's H100. With its architectural changes and a bold pricing strategy, Gaudi 3 may upend the status quo.

Still, Nvidia's solid ecosystem, advanced memory technology, and forthcoming Blackwell architecture mean it will remain a major player. For businesses and developers, the increasing competition means more options, better prices, and faster innovation. Both Intel and Nvidia will play an important role in shaping the direction of artificial intelligence as the landscape evolves.

Advertisement

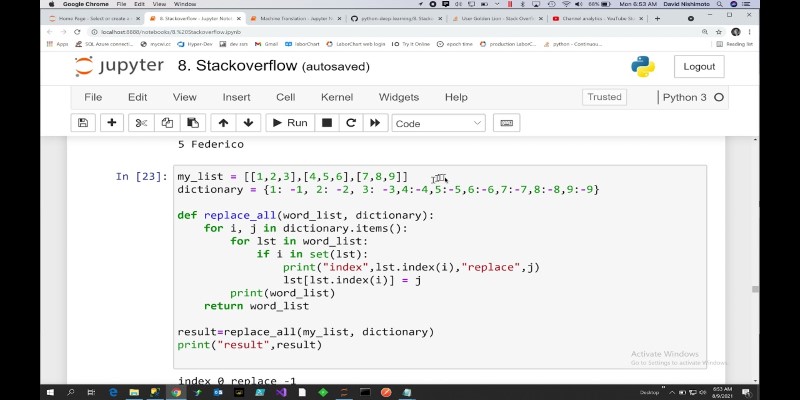

How to replace values in a list in Python with 10 easy methods. From simple index assignment to advanced list comprehension, explore the most effective ways to modify your Python lists

Learn 10 clean and effective ways to iterate over a list in Python. From simple loops to advanced tools like zip, map, and deque, this guide shows you all the options

Discover how to install and set up Copilot for Microsoft 365 easily with our step-by-step guide for faster productivity.

Master the Python list insert() method with this easy-to-follow guide. Learn how to insert element in list at specific positions using simple examples

Amazon explores AI-generated imagery to create targeted, efficient ads and improve marketing results for brands.

Compare Intel’s AI Gaudi 3 chip with Nvidia’s latest to see which delivers better performance for AI workloads.

Discover different methods to check if an element exists in a list in Python. From simple techniques like using in to more advanced methods like binary search, explore all the ways to efficiently check membership in a Python list

Struggling with Copilot's cost or limits? Explore smarter alternative AI tools with your desired features and workflow.

Need to save your pandas DataFrame as a CSV file but not sure which method fits best? Here are all the practical ways to do it—simple, flexible, and code-ready

Learn how to loop through dictionaries in Python with clean examples. Covers keys, values, items, sorted order, nesting, and more for efficient iteration

Discover Oracle’s GenAI: built-in LLMs, data privacy guarantees, seamless Fusion Cloud integration, and more.

Learn how __init__ in Python works to initialize objects during class creation. This guide explains how the Python class constructor sets instance variables, handles defaults, and simplifies object setup